Data engineering is the practice of designing, building, and maintaining the systems and infrastructure that collect, store, transform, and deliver data for analysis and decision-making. It involves creating reliable data pipelines that extract information from various sources, clean and structure it, and make it accessible in formats suitable for analytics, reporting, and machine learning.

A common use case in data engineering is the full load pattern, an ingestion method that processes and loads the entire dataset on every execution. While effective, this approach can become resource-intensive as the volume of data grows. The full load method is typically applied when a dataset lacks fields or indicators that show when a record was inserted or last updated, which makes incremental loading impractical. Although it is among the most straightforward ingestion patterns to implement, the full load approach has pitfalls that require careful planning to keep it efficient and reliable.
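
As a minimal sketch, assume a hypothetical `devices` table that exists in both a source and a target database, with Python's built-in `sqlite3` standing in for real data stores. Because there is no change marker to filter on, the extract query has no `WHERE` clause, so each run's cost scales with the size of the whole table rather than with the number of changed rows. The `full_load` function and the table layout are illustrative assumptions, and the overwrite step is deliberately naive.

```python
import sqlite3


def full_load(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Copy the entire hypothetical `devices` table from source to target.

    There is no `updated_at`-style column, so nothing narrows the extract:
    every run reads and rewrites every row.
    """
    rows = source.execute("SELECT device_id, model FROM devices").fetchall()
    target.execute("DELETE FROM devices")  # discard the previous copy entirely
    target.executemany("INSERT INTO devices VALUES (?, ?)", rows)
    target.commit()
    return len(rows)


src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for conn in (src, dst):
    conn.execute("CREATE TABLE devices (device_id TEXT, model TEXT)")
src.executemany("INSERT INTO devices VALUES (?, ?)", [("d1", "m1"), ("d2", "m1")])
src.commit()

full_load(src, dst)
src.execute("UPDATE devices SET model = 'm2' WHERE device_id = 'd1'")  # untracked change
src.commit()
full_load(src, dst)  # the change arrives only because everything is reloaded
```

The target stays equal to the source even though nothing records which row changed; the price is that every run moves the full table.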

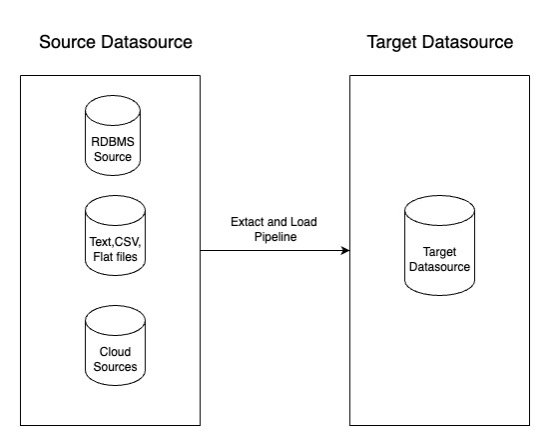

In this scenario, the transformation jobs that populate the pipeline's target data store depend on additional IoT device information from a third-party data provider. This dataset changes only a few times a week and contains fewer than one million rows, making it a relatively slowly evolving entity. However, the data provider does not expose a “last updated” or “created at” attribute, or any other time marker, that identifies which rows have changed since the last ingestion. This forces users to load the full dataset every time instead of only the changed rows. Given these limitations, the Full Loader pattern is a good fit. Its simplest implementation follows a two-step Extract and Load (EL) process, in which a native command exports the entire dataset from the source and imports it into the target system. This approach works especially well for homogeneous data stores, because no transformation is required during the transfer. Although it may not be the most efficient method for large, rapidly changing datasets, it is effective for smaller, slowly evolving datasets, as it ensures completeness and consistency in the absence of change-tracking attributes.

If the source and target data stores are of a similar type, for example when migrating data from PostgreSQL to another PostgreSQL database, intermediate transformations are generally unnecessary because the data structures are already aligned. However, when the source and target systems differ in nature, such as when transferring data from a relational database (RDBMS) to a NoSQL database, data transformations are typically required to adjust the schema, format, and structure to fit the target environment.
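
The sketch below contrasts the two cases, again using Python's `sqlite3` and a hypothetical `devices` table. For homogeneous stores, SQLite's `Connection.iterdump()` plays the role of a native export command (similar in spirit to `pg_dump` and `psql` for PostgreSQL), and `executescript()` replays that dump unchanged in the target. For a heterogeneous target, the rows are reshaped into nested documents; the MongoDB-style `_id`/`spec` layout and both function names are assumptions for illustration, not requirements of any particular NoSQL database.

```python
import sqlite3


def export_import(source: sqlite3.Connection, target: sqlite3.Connection) -> None:
    """Homogeneous EL: replay the source's own SQL dump in the target, unchanged.

    Assumes the source database holds only the hypothetical `devices` table.
    """
    dump = "\n".join(source.iterdump())  # export: CREATE TABLE and INSERT statements
    target.execute("DROP TABLE IF EXISTS devices")  # a full load replaces the old copy
    target.executescript(dump)  # import: schemas already match, no transformation


def to_documents(source: sqlite3.Connection) -> list[dict]:
    """Heterogeneous case: reshape flat relational rows into nested documents."""
    cursor = source.execute(
        "SELECT device_id, model, firmware FROM devices ORDER BY device_id"
    )
    return [
        {"_id": device_id, "spec": {"model": model, "firmware": firmware}}
        for device_id, model, firmware in cursor
    ]


src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE devices (device_id TEXT PRIMARY KEY, model TEXT, firmware TEXT)")
src.executemany(
    "INSERT INTO devices VALUES (?, ?, ?)",
    [("d1", "m1", "1.0"), ("d2", "m2", "2.1")],
)
src.commit()

dst = sqlite3.connect(":memory:")
export_import(src, dst)
export_import(src, dst)  # rerunning replaces the table instead of duplicating rows
docs = to_documents(src)  # ready to bulk-insert into a document store
```

The homogeneous path never inspects individual rows, which is why it is cheap to build; the heterogeneous path needs explicit mapping code that has to change whenever either schema changes.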

Full Loader implementations are typically designed as batch jobs that run on a regular schedule. When the data volume grows gradually, this approach works well because the required compute resources remain relatively stable and predictable, and the loading infrastructure can run reliably for long periods without performance concerns. However, challenges arise when datasets evolve more dynamically. For instance, if the dataset suddenly doubles in size from one day to the next, static compute resources can cause significant slowdowns or even failures due to hardware limits. To address this variability, organizations can take advantage of auto-scaling capabilities in their data processing layer. Auto-scaling allocates additional compute resources automatically during spikes in data volume, maintaining performance and reliability while optimizing resource usage.
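
In practice, auto-scaling is usually a platform setting (Spark's dynamic allocation, enabled with `spark.dynamicAllocation.enabled`, is one example) rather than hand-written code. The hypothetical `plan_workers` function below only sketches the kind of sizing decision such a layer makes before a full load: derive the worker count from the volume to move and clamp it to configured bounds. The rows-per-worker figure and the limits are placeholder assumptions.

```python
import math


def plan_workers(
    row_count: int,
    rows_per_worker: int = 250_000,
    min_workers: int = 1,
    max_workers: int = 16,
) -> int:
    """Size a hypothetical worker pool from the volume the next full load must move.

    The thresholds are placeholders; real auto-scalers typically react to
    signals such as CPU utilization or the backlog of pending tasks.
    """
    needed = math.ceil(row_count / rows_per_worker)
    return max(min_workers, min(max_workers, needed))


# 4 workers for 900k rows, 8 when the volume doubles, capped at 16 for a huge spike.
for rows in (900_000, 1_800_000, 50_000_000):
    print(rows, plan_workers(rows))
```

The upper bound matters as much as the scaling rule: it keeps a sudden, possibly erroneous, jump in source volume from provisioning unbounded compute.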

Another important risk of the Full Loader pattern is data consistency. Because the process completely overwrites the dataset, a common strategy is to run a truncate-and-load operation on each execution. However, this approach has significant drawbacks. For example, if the ingestion job runs while other pipelines or consumers are reading the dataset, they may see incomplete or missing data while the insert operation is still in progress. The simplest and most effective mitigation is to wrap the load in a transaction, because the data store then controls visibility: consumers see either the old data or the new data, never a partially loaded state. Where the data store does not support transactions, a practical workaround is an abstraction layer such as a database view. The new data is loaded into a separate structure, and the view is switched to it only once loading has finished, so consumers are never exposed to incomplete data.
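
To make the visibility guarantee concrete, here is a minimal sketch with Python's `sqlite3`, assuming a hypothetical `devices` table in a temporary database file. SQLite's write-ahead-log mode is used so that a second connection can keep reading the last committed snapshot while a reload is in progress. The load is paused before its commit to imitate a slow job, and the reader's row counts are recorded during and after it. The helper names are illustrative assumptions.

```python
import os
import sqlite3
import tempfile
from contextlib import closing


def reload_in_transaction(conn: sqlite3.Connection, rows) -> None:
    """Truncate-and-load as one transaction: commit on success, roll back on error."""
    with conn:
        conn.execute("DELETE FROM devices")  # SQLite has no TRUNCATE statement
        conn.executemany("INSERT INTO devices VALUES (?, ?)", rows)


def count_rows(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM devices").fetchone()[0]


def concurrent_read_demo() -> tuple[int, int]:
    """Return what a reader counts during and after an in-progress reload."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "devices.db")
        with closing(sqlite3.connect(path)) as writer:
            writer.execute("PRAGMA journal_mode=WAL")  # readers keep the committed snapshot
            writer.execute("CREATE TABLE devices (device_id TEXT, model TEXT)")
            reload_in_transaction(writer, [("d1", "old"), ("d2", "old")])
            with closing(sqlite3.connect(path)) as reader:
                # Start the next load but stop before committing, as if it were slow.
                writer.execute("DELETE FROM devices")  # implicitly opens a transaction
                writer.execute("INSERT INTO devices VALUES ('d1', 'new')")
                during = count_rows(reader)  # uncommitted work is invisible to the reader
                writer.commit()
                after = count_rows(reader)  # the new data appears all at once
    return during, after


print(concurrent_read_demo())
```

If the load fails midway, the `with conn:` block rolls back the `DELETE` as well, so the previous data survives. Whether readers see the old snapshot or wait on a lock during the load depends on the store and its isolation settings.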

In addition to concurrency concerns, a full overwrite removes the ability to revert to a previous version of the dataset if problems surface afterwards. Without versioning or backups, once the data is replaced, its previous state cannot be recovered. To guard against this, it is critical to maintain regular dataset backups or to implement versioned storage. If unexpected problems arise, the system can then roll back to a reliable earlier version, preserving both data integrity and operational continuity.
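
One way to combine versioned storage with the view-based abstraction described earlier is sketched below, again with Python's `sqlite3`. Each run writes its snapshot to a new table (`devices_v1`, `devices_v2`, and so on), records the version in a small `versions` table, and repoints a `devices` view at the new table. Rolling back then means repointing the view at an older table. All table names and helper functions are hypothetical; a real deployment might rely on backups or on the data store's own snapshot features instead.

```python
import sqlite3


def _publish(conn: sqlite3.Connection, version: int) -> None:
    # Repoint the stable `devices` view that consumers query; no rows are copied.
    conn.execute("DROP VIEW IF EXISTS devices")
    conn.execute(f"CREATE VIEW devices AS SELECT * FROM devices_v{int(version)}")


def load_version(conn: sqlite3.Connection, rows) -> int:
    """Write a full snapshot into a new table devices_v<N>, then publish it."""
    with conn:  # commits on success, rolls back everything on error
        conn.execute("BEGIN")
        conn.execute("CREATE TABLE IF NOT EXISTS versions (version INTEGER PRIMARY KEY)")
        (latest,) = conn.execute("SELECT COALESCE(MAX(version), 0) FROM versions").fetchone()
        version = latest + 1
        conn.execute(f"CREATE TABLE devices_v{version} (device_id TEXT, model TEXT)")
        conn.executemany(f"INSERT INTO devices_v{version} VALUES (?, ?)", rows)
        conn.execute("INSERT INTO versions VALUES (?)", (version,))
        _publish(conn, version)
    return version


def rollback_to(conn: sqlite3.Connection, version: int) -> None:
    """Re-publish an earlier snapshot, provided its table has not been pruned."""
    with conn:
        conn.execute("BEGIN")
        _publish(conn, version)


# isolation_level=None: the functions above issue BEGIN themselves.
conn = sqlite3.connect(":memory:", isolation_level=None)
load_version(conn, [("d1", "m1"), ("d2", "m1")])
load_version(conn, [("d1", "m2")])  # suppose this snapshot turns out to be bad
rollback_to(conn, 1)
```

Consumers always query `devices`, so both publishing and rolling back are a quick metadata switch rather than a data copy. Because every version is kept, storage grows with each run, so a retention policy that drops all but the last few versioned tables would normally accompany this approach.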
